Intel Xeon W-3400 Content Creation Preview

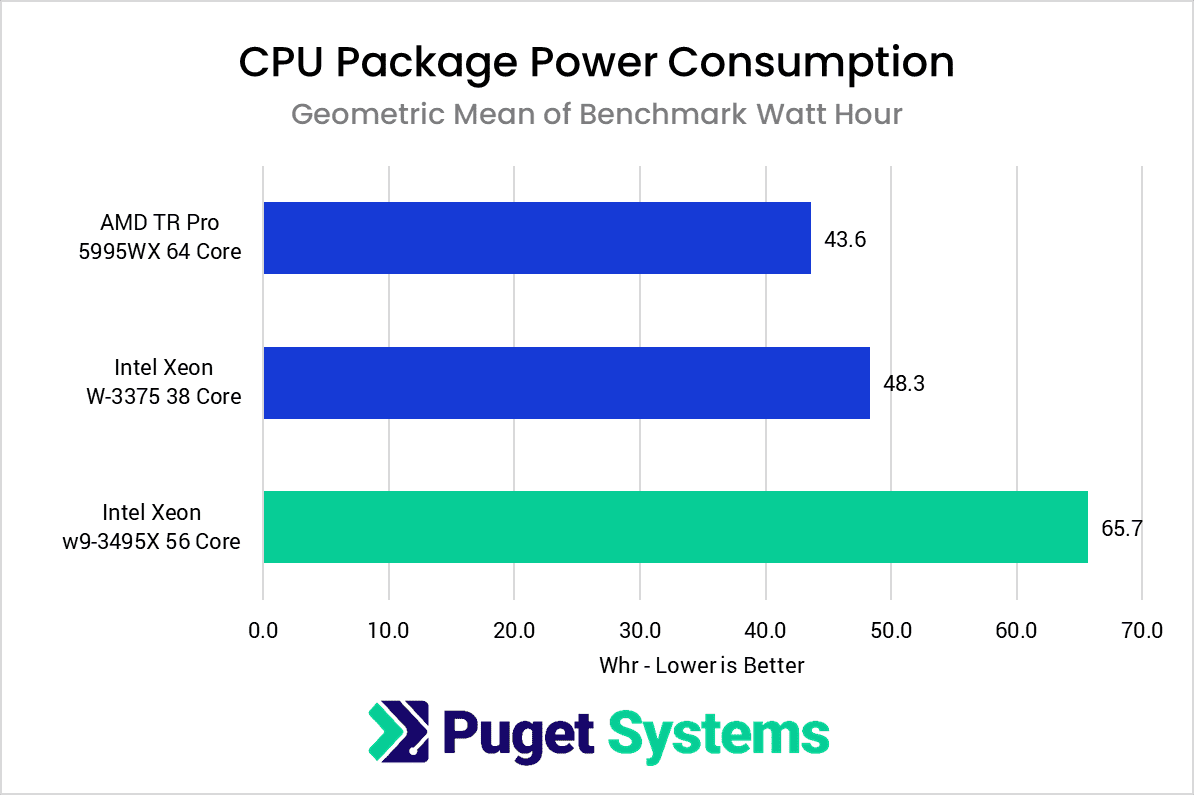

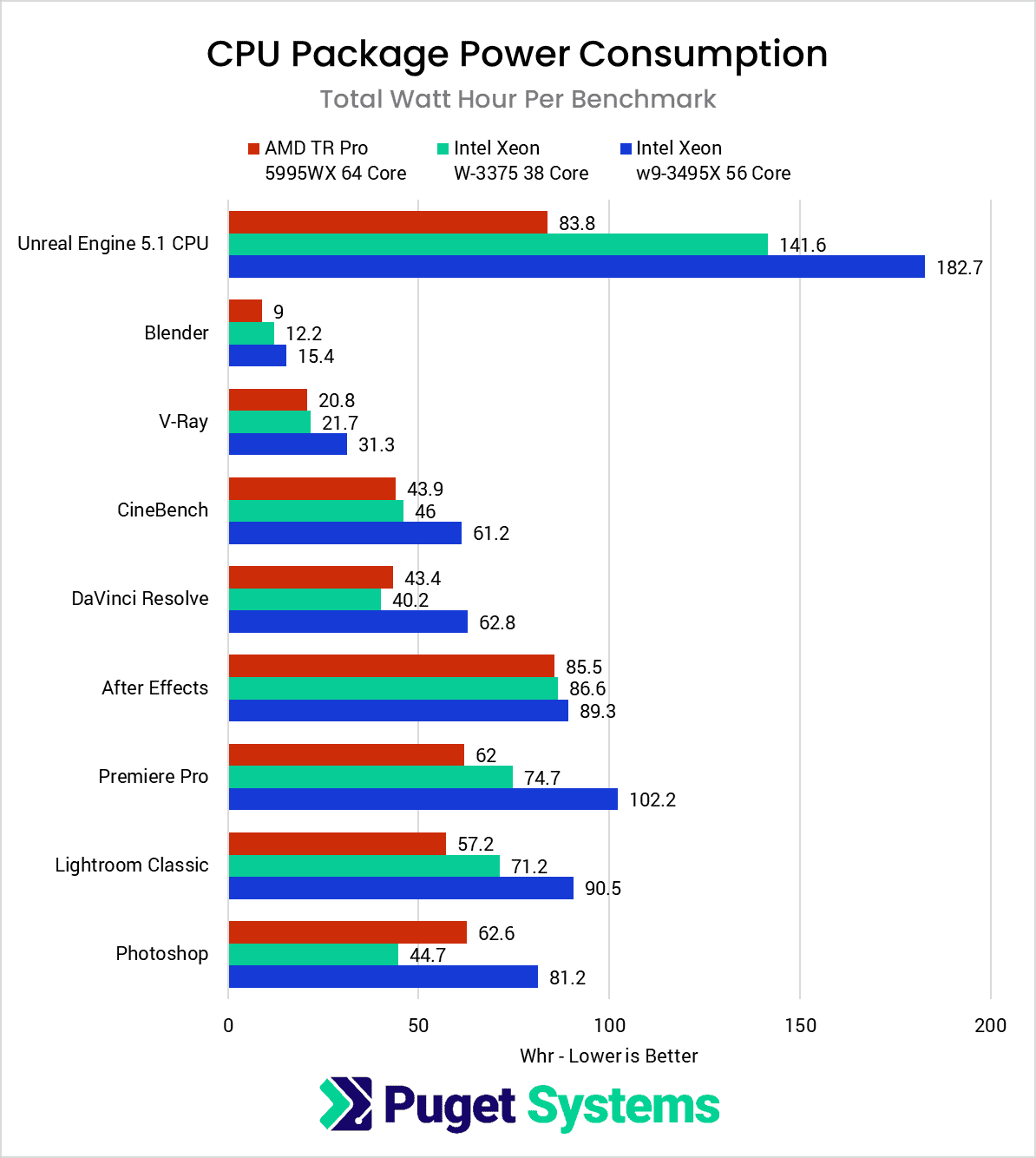

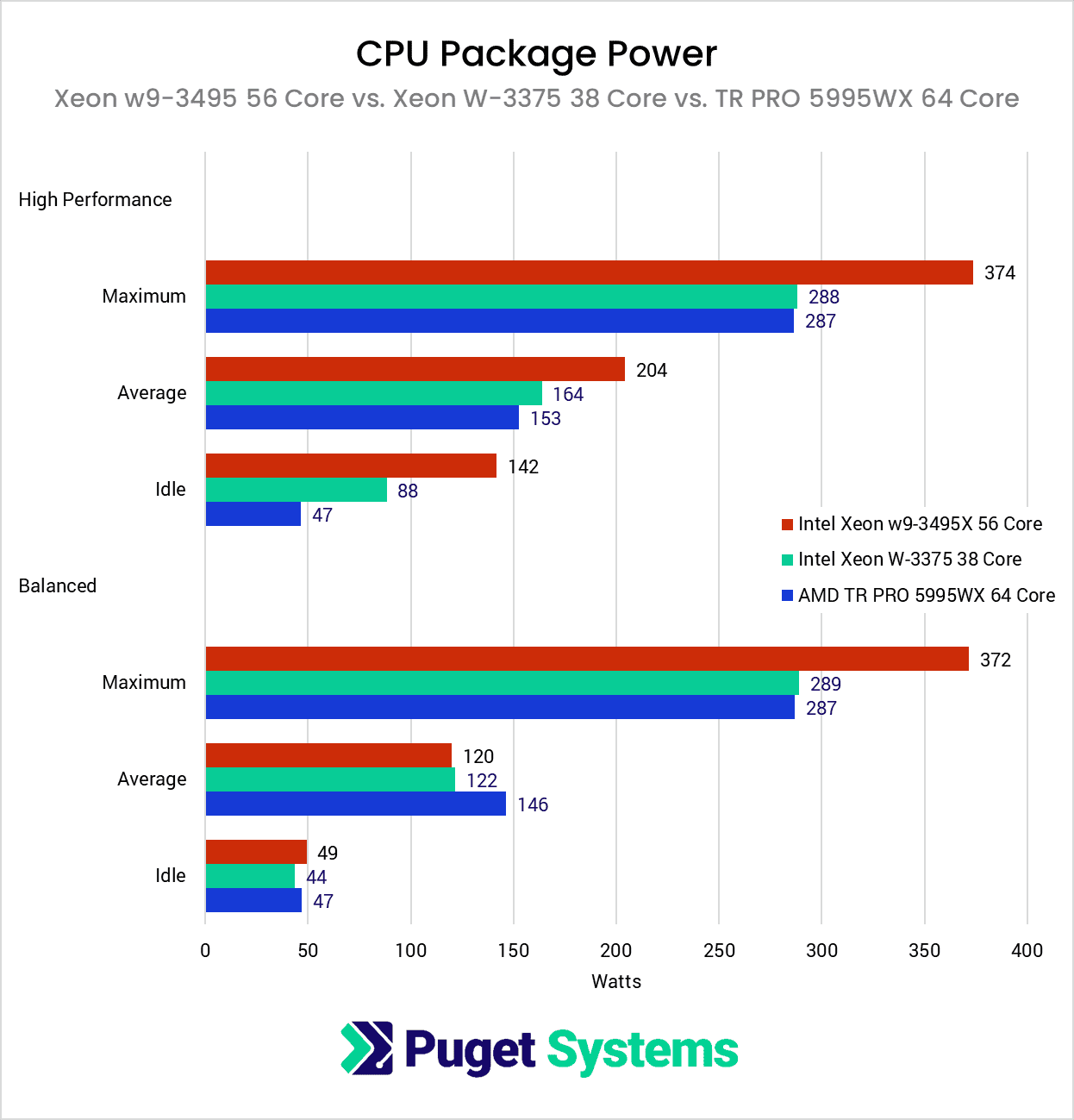

https://www.pugetsystems.com/labs/articles/intel-xeon-w-3400-content-creation-preview/

For Intel, however, the idle power draw in particular increases significantly. On the previous-generation Xeon W-3300 series, we are looking at about a 2x increase, going from 44W to 88W on the Xeon W-3375 38 Core. The difference is even larger on the new Xeons, with the w9-3495 going from 49W to 142W, nearly a 3x increase in idle power draw.

This is going to impact the system in two main ways: the cooler is going to have to work harder at idle (and thus be a small amount louder), and it will cost a bit more money to run your system. The raw costs aren’t that high in the context of the price of a system of this level (assuming it is idle 24/7/365, and energy cost is 15 cents per kWh, that is only a maximum difference of about $120 per year), but it is still a factor to consider.
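The cost estimate above can be checked with a few lines of arithmetic. This is only a sketch under the article's stated assumptions (system idle all year, 15 cents per kWh); the function name `extra_annual_cost` and its parameters are made up for illustration and do not come from the article.

```python
HOURS_PER_YEAR = 24 * 365  # idle 24/7/365, as assumed in the article


def extra_annual_cost(idle_before_w, idle_after_w, price_per_kwh=0.15):
    """Extra yearly energy cost (in dollars) of a higher idle power draw.

    idle_before_w / idle_after_w: idle power draw in watts.
    price_per_kwh: energy price in dollars per kWh (15 cents assumed).
    """
    extra_kwh = (idle_after_w - idle_before_w) * HOURS_PER_YEAR / 1000
    return extra_kwh * price_per_kwh


# Idle figures quoted in the article
w9_3495 = extra_annual_cost(49, 142)  # new Xeon w9-3495
w_3375 = extra_annual_cost(44, 88)    # previous-generation Xeon W-3375

print(f"w9-3495: ${w9_3495:.2f} per year")
print(f"W-3375:  ${w_3375:.2f} per year")
```

For the w9-3495 this gives roughly $122 per year, in line with the "about $120" maximum quoted above; the W-3375 comes out at roughly $58 per year.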

With this information, we decided to present the results in this article using only the “High Performance” Windows power profile. We actually did all our testing with both profiles to see how much of an impact it made on each CPU, but since Intel Xeon benefits so much from using the “High Performance” profile, we are going to (for now) stick to presenting just those results.

In High Performance mode, 142W at idle.

Some strange things, especially the part about the reduction in the number of tiles.
