Nemesis11

Power Member

128 cores with 16 MB of L3, this must be one of the most unbalanced processors ever to reach the market.

If they built it, there must be customers who need it, but in many workloads performance is going to suffer quite a bit.
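To put a number on "unbalanced", here is a quick back-of-the-envelope sketch of the L3 share per core, using only the 128 cores and 16 MB quoted above. The function name is just for illustration, and it assumes the L3 is split evenly across all cores, which a real shared cache does not strictly do.

```python
def l3_per_core_kib(l3_total_mib: float, cores: int) -> float:
    """Average L3 share per core in KiB, assuming an even split (illustrative only)."""
    return l3_total_mib * 1024 / cores


# Figures quoted in the post: 16 MB of L3 shared by 128 cores.
share = l3_per_core_kib(16, 128)
print(f"L3 per core: {share:.0f} KiB")
```

That works out to 128 KiB of L3 per core, which is why cache-sensitive workloads are likely to feel it.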

Anyway.......

Alibaba Yitian 710:

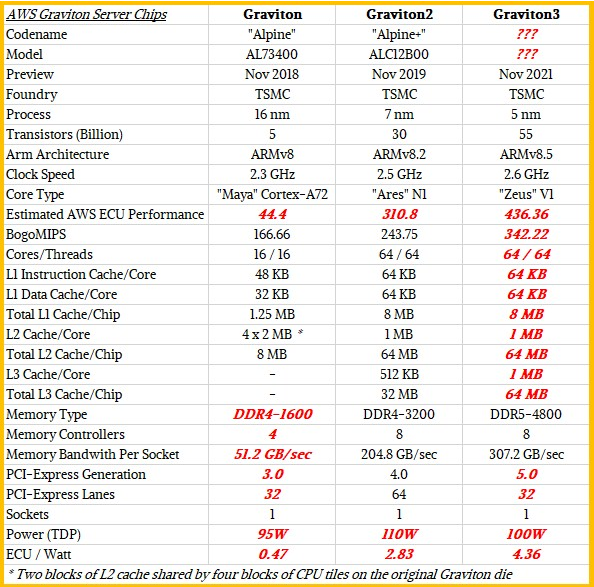

- 128 ARM v9 cores

- 60 billion transistors

- 3.2 GHz

- 8 DDR5 channels

- 96 PCIe lanes

- TSMC 5 nm

- SPECint2017 of 440 (equal to 2 Xeon Platinum 8362, 64 cores at 2.80 GHz)

- "the Yitian 710 is 20% faster and 50% more energy efficient than the current state-of-the-art ARM server CPUs" (whichever those may be....)

https://www.alibabacloud.com/press-...er-chips-to-optimize-cloud-computing-services
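For a rough sense of what the SPECint2017 figure means per core, the sketch below divides the quoted score by the core counts from the list. It assumes the "64 cores" refers to the total across both Xeons, and that the score scales evenly per core, which is a simplification. The helper name is made up for this example.

```python
def score_per_core(score: float, cores: int) -> float:
    """Throughput score divided evenly across cores (a simplification)."""
    return score / cores


# Figures from the post; 64 is ASSUMED to be the combined core count of the 2 Xeons.
yitian_per_core = score_per_core(440, 128)
xeon_per_core = score_per_core(440, 64)

print(f"Yitian 710: {yitian_per_core:.2f} per core")
print(f"2x Xeon Platinum 8362: {xeon_per_core:.2f} per core")
print(f"Ratio: {yitian_per_core / xeon_per_core:.2f}")
```

Under those assumptions, each Yitian core delivers about half the throughput score of each Xeon core, and the chip matches the pair by having twice as many cores in one socket.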